In Part 1 of this article, I described the structure and requirements for making an app, a “Skill”, for the Amazon Echo. Now it’s time to put that into practice and get into the code for a fully-functional Skill.

For this project, I wanted to be able to ask the Echo about new shows on my Kodi media center. Thanks to PVR technology, we’ve completely lost track of the schedule for new TV show episodes. So typical questions around the house include, “do we have anything to watch?” and “do we have a new episode of Modern Family?” Let’s get the Echo to answer those questions!

What Are We Working With?

The whole point is to make the Echo fit into your home automation setup, which is almost certainly different from mine. So here’s a complete outline of the setup that was used for this article. Where your setup differs from mine, you can use the concepts and code here as a starting point to make it work the way you want it to.

- Kodi media center installed on a mini PC (like one of these) using the Kodibuntu installation.

- The Kodi JSON-RPC API, which just needs to be enabled in Kodi’s system configuration options.

- A second Linux server running Apache with mod_wsgi which is accessible from the Internet.

- Python 2.7 and the python-requests library.

- A self-signed certificate for running SSL, which is free and compatible with the Alexa Skills Kit test mode.

- The Alexa Skills Kit documentation and the Developer Console.

- The code for our Skill, from the Maker Musings GitHub repository.

OK, that’s certainly enough links for now.

Accessing the Kodi API

One of the files in the GitHub repository is kodi.py, which is a simple wrapper module around parts of the Kodi JSON-RPC API. Find these lines:

# Change this to the IP address of your Kodi server or always pass in an address
KODI = '192.168.5.31'
PORT = 8080

and change the address for KODI to be correct for your setup. Once that’s done, you can test that you can use the API from Python. If it doesn’t work, make sure that your Kodi configuration is set to permit control of Kodi via HTTP. We’re going to be using the GetUnwatchedEpisodes method. Try the code snippet below to see the kinds of information it provides.

import kodi
print kodi.GetUnwatchedEpisodes()
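To give a feel for what a wrapper like kodi.py has to do, here’s a minimal sketch of a raw JSON-RPC call for unwatched episodes. It is not the code from the repository: the names build_unwatched_request and fetch_unwatched are made up for illustration, it uses Python 3’s standard library instead of python-requests, and it assumes that Kodi’s VideoLibrary.GetEpisodes method with a playcount filter is a reasonable stand-in for whatever GetUnwatchedEpisodes does internally.

```python
import json
import urllib.request

KODI = '192.168.5.31'  # same placeholder address as above; change for your setup
PORT = 8080


def build_unwatched_request(request_id=1):
    """Build a JSON-RPC 2.0 payload asking Kodi for episodes never played."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "VideoLibrary.GetEpisodes",
        "params": {
            # Only episodes whose play count is zero
            "filter": {"field": "playcount", "operator": "is", "value": "0"},
            "properties": ["title", "showtitle", "dateadded"],
        },
    }


def fetch_unwatched(host=KODI, port=PORT, timeout=5):
    """POST the request to Kodi's HTTP endpoint and return the episode list."""
    url = "http://%s:%d/jsonrpc" % (host, port)
    data = json.dumps(build_unwatched_request()).encode("utf-8")
    req = urllib.request.Request(
        url, data=data, headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        reply = json.loads(resp.read().decode("utf-8"))
    # Be defensive: the "episodes" key may be missing when nothing matches
    return reply.get("result", {}).get("episodes", [])
```

Calling fetch_unwatched() only works when Kodi is reachable and HTTP control is enabled, so build_unwatched_request() is kept separate to make the payload easy to inspect on its own.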

Designing The Voice Interaction

We’d like to be able to ask the Echo about new episodes of TV shows that are ready to be watched. There are three slightly different types of questions we’d like the Echo to be able to answer:

- “Do we have any new shows to watch?” Although this is a yes/no question, we’ll have the Echo provide a little more information about the shows.

- “What new shows do we have?” When asked this, we’ll have the Echo list the names of all the shows that have new, unseen episodes.

- “Do we have any new episodes of <show name>?” When you ask the Echo about a specific show, it will respond with how many unseen episodes are in the library.

Each of these three question types maps to an Intent. The first two don’t include any TV show names, but the third question form includes the name of any TV show that the user might say. For that Intent, we need to also define a Slot, which is the way that the show name gets passed to our app code.

The file alexa.intents contains the following contents, which describe our Intents in the format required by the ASK:

{
  "intents": [
    {
      "intent": "CheckNewShows",
      "slots": []
    },
    {
      "intent": "WhatNewShows",
      "slots": []
    },
    {
      "intent": "NewShowInquiry",
      "slots": [{"name": "Show", "type": "LITERAL"}]
    }
  ]
}
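To make the Slot idea concrete, here’s a rough sketch of how the show name arrives in the JSON that Alexa posts to your web server, and how you might pull it out. The sample request is heavily abbreviated (real requests also carry session details, a request ID, and a timestamp), and extract_intent is a hypothetical helper name, not something from the repository.

```python
import json

# Abbreviated example of an IntentRequest body for the NewShowInquiry intent
SAMPLE_REQUEST = json.loads("""
{
  "version": "1.0",
  "request": {
    "type": "IntentRequest",
    "intent": {
      "name": "NewShowInquiry",
      "slots": {"Show": {"name": "Show", "value": "modern family"}}
    }
  }
}
""")


def extract_intent(alexa_request):
    """Return (intent_name, {slot_name: spoken_value}) from an IntentRequest."""
    intent = alexa_request["request"]["intent"]
    slots = {}
    for name, slot in (intent.get("slots") or {}).items():
        # A slot the user didn't fill arrives without a "value" key
        slots[name] = slot.get("value")
    return intent["name"], slots


print(extract_intent(SAMPLE_REQUEST))
# ('NewShowInquiry', {'Show': 'modern family'})
```

Intents with an empty slot list, like CheckNewShows, simply come back with an empty dictionary.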

Next, we need to describe the ways in which a typical user might phrase the questions we support. As described in a previous blog post, these are called Utterances and you can quickly wind up with many, many different ways that people might say them. For our Kodi skill, there are almost 1,300 sample utterances. Fortunately, it’s pretty easy to express all these variations in a few lines and let the computer generate all the permutations.

The file alexa.utterances has the short-hand version of our sample utterances:

CheckNewShows (if there are/are there/do (we/i) have) (/any/some) new shows (/recorded) (/to watch) (/today/tonight)

CheckNewShows (if there is/is there/do (we/i) have) a new show (/recorded) (/to watch) (/today/tonight)

WhatNewShows what (/new) shows ((/do) (we/i/you) have/are (ready/waiting/recorded/there/on the (/media) server))

NewShowInquiry if (there is/we have) a new (/episode of) {((/The )Amazing Race/America's Got Talent/Arrow/(/The )Big Bang Theory/Blackish/(/The )Blacklist/Brooklyn Nine Nine/Doctor Who/Downton Abbey/The Flash/Gotham/How To Get Away With Murder/Modern Family/Person of Interest/Scandal)|Show} (/to watch/recorded/on the (/media) server)

NewShowInquiry if (there are/we have) any (/(/new )episodes of) {((/The )Amazing Race/America's Got Talent/Arrow/(/The )Big Bang Theory/Blackish/(/The )Blacklist/Brooklyn Nine Nine/Doctor Who/Downton Abbey/The Flash/Gotham/How To Get Away With Murder/Modern Family/Person of Interest/Scandal)|Show} (/to watch/recorded/on the (/media) server)

NewShowInquiry if (there is/we have) {((/The )Amazing Race/America's Got Talent/Arrow/(/The )Big Bang Theory/Blackish/(/The )Blacklist/Brooklyn Nine Nine/Doctor Who/Downton Abbey/The Flash/Gotham/How To Get Away With Murder/Modern Family/Person of Interest/Scandal)|Show} (/to watch/recorded/on the (/media) server)

When you paste the shorthand utterances into the Amazon Echo Utterance Expander tool, it will give you the output that you need to put into the sample utterances field in the Amazon Developer Console.
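If you’re curious how that kind of expansion works, here’s a small sketch of the idea in Python. It is not the Utterance Expander’s actual code, just my assumption of the rules implied by the shorthand above: parentheses group alternatives, slashes separate them, an empty alternative makes the phrase optional, and groups can nest. The function names are invented for this example.

```python
import re


def _parse_sequence(text, pos):
    """Expand text from pos until an unmatched '/' or ')' or the end."""
    variants = ['']
    while pos < len(text) and text[pos] not in '/)':
        if text[pos] == '(':
            options, pos = _parse_group(text, pos + 1)
            # Every variant so far combines with every option in the group
            variants = [v + o for v in variants for o in options]
        else:
            start = pos
            while pos < len(text) and text[pos] not in '(/)':
                pos += 1
            variants = [v + text[start:pos] for v in variants]
    return variants, pos


def _parse_group(text, pos):
    """Collect the '/'-separated alternatives that follow an opening '('."""
    options = []
    while True:
        sequence, pos = _parse_sequence(text, pos)
        options.extend(sequence)
        if pos >= len(text):
            raise ValueError("unbalanced parenthesis")
        if text[pos] == ')':
            return options, pos + 1
        pos += 1  # skip the '/' and read the next alternative


def expand(template):
    """Return every utterance a shorthand template stands for, in order."""
    variants, pos = _parse_sequence(template, 0)
    if pos != len(template):
        raise ValueError("unexpected %r at position %d" % (template[pos], pos))
    seen, result = set(), []
    for variant in variants:
        # Optional phrases leave double spaces behind; tidy them up
        cleaned = re.sub(r"\s+", " ", variant).strip()
        if cleaned not in seen:
            seen.add(cleaned)
            result.append(cleaned)
    return result


for line in expand("CheckNewShows do (we/i) have (/any) new shows"):
    print(line)
```

Run against the first CheckNewShows line above, this sketch produces 144 utterances, which shows how quickly a handful of optional phrases multiplies out.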

The Kodi Skill Source Code

As described above, the setup I’m using is Apache with mod_wsgi. So the source code to the web application part of the skill is in echo_handler.wsgi. In this article, I’m not going to go into the details of setting up the Apache configuration except to point out that Amazon will only send requests to your web server using https:// which means you must have SSL configured. Don’t forget that you might need to put your WSGI configuration into ssl.conf and not just into the non-SSL configuration sections.

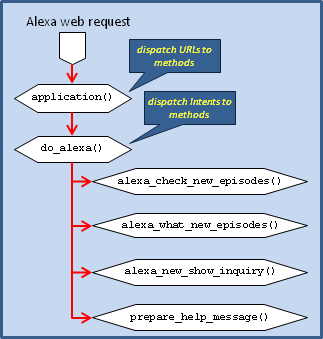

The basic wsgi dispatch stuff

The logic that handles the request and routes it to the appropriate functions for doing the real work is pretty straightforward. First, the request is dispatched to a function associated with the URL. Since the ASK always uses the same URL, there’s only one function (do_alexa) to dispatch to, although it’s configured twice in order to handle www.yoursite.com and www.yoursite.com/ (with and without the trailing /). You can handle additional URLs to do anything you like by adding to the HANDLERS list.

The do_alexa function parses the Alexa request and finds out which Intent is being invoked as well as handling the LaunchRequest when your user says only, “Alexa open <app name>”. Then a secondary dispatch is done to the function that knows how to handle the specified Intent. You can add more Intents in the code by adding to the INTENTS list, and updating your intent schema and sample utterances also, of course.
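Here’s a stripped-down sketch of that two-level dispatch. The names do_alexa, HANDLERS, and INTENTS come from echo_handler.wsgi, but the shape I give them here (lists of name and function pairs), the stand-in intent handler, and the fallback messages are assumptions made for illustration, so check the real file before relying on any of it. It is written for Python 3.

```python
import io
import json


def alexa_check_new_episodes(slots):
    # Stand-in for a real handler that would query Kodi
    return "There are no new shows to watch."


# Intent name -> handler; supporting a new Intent means adding an entry here
INTENTS = [
    ("CheckNewShows", alexa_check_new_episodes),
]


def do_alexa(environ, start_response):
    """Parse the Alexa request body and hand it to the matching intent handler."""
    length = int(environ.get("CONTENT_LENGTH") or 0)
    body = json.loads(environ["wsgi.input"].read(length).decode("utf-8"))
    request = body["request"]

    text = "Sorry, I don't know how to do that."
    if request["type"] == "LaunchRequest":
        text = "Ask me about new shows."
    elif request["type"] == "IntentRequest":
        for name, handler in INTENTS:
            if name == request["intent"]["name"]:
                text = handler(request["intent"].get("slots") or {})
                break

    payload = json.dumps({
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            "shouldEndSession": True,
        },
    }).encode("utf-8")
    start_response("200 OK", [("Content-Type", "application/json"),
                              ("Content-Length", str(len(payload)))])
    return [payload]


# URL path -> handler, registered with and without the trailing slash
HANDLERS = [
    ("", do_alexa),
    ("/", do_alexa),
]


def application(environ, start_response):
    """WSGI entry point: route on the request path."""
    path = environ.get("PATH_INFO", "")
    for route, handler in HANDLERS:
        if path == route:
            return handler(environ, start_response)
    start_response("404 Not Found", [("Content-Type", "text/plain")])
    return [b"Not found"]


# Exercise the app without a web server by faking a WSGI request
raw = json.dumps({"request": {"type": "IntentRequest",
                              "intent": {"name": "CheckNewShows"}}}).encode("utf-8")
environ = {"PATH_INFO": "/", "CONTENT_LENGTH": str(len(raw)),
           "wsgi.input": io.BytesIO(raw)}
statuses = []
reply = application(environ, lambda status, headers: statuses.append(status))
print(statuses[0])
```

Faking the environ dictionary like this is a handy way to test handlers before involving Apache or the Developer Console.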

Handling each intent

The code for each of our intents basically starts out the same way. First the kodi.py module is used to get information about the unwatched episodes, possibly further reduced to only the recently-added ones. Then the TV show names corresponding to those episodes are collected. This makes it pretty easy to answer each of the questions that the user may ask.

def alexa_check_new_episodes(slots):
    # Responds to the question, "Are there any new shows to watch?"

    # Get the list of unwatched EPISODES from Kodi
    new_episodes = kodi.GetUnwatchedEpisodes()

    # Find out how many EPISODES were recently added and get the names of the SHOWS
    really_new_episodes = [x for x in new_episodes if x['dateadded'] >= datetime.datetime.today() - datetime.timedelta(5)]
    really_new_show_names = list(set([sanitize_show(x['show']) for x in really_new_episodes]))
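The same filtering step can be tried out on its own with made-up data. This sketch assumes, as the snippet above suggests, that each episode is a dictionary with a 'show' name and a 'dateadded' value that is already a datetime object. The summarize_new_episodes name is hypothetical, and it takes the current time as a parameter so the result is repeatable.

```python
import datetime
from collections import Counter


def summarize_new_episodes(episodes, now, days=5):
    """Return {show name: count} for episodes added within the last `days`."""
    cutoff = now - datetime.timedelta(days=days)
    recent = [ep for ep in episodes if ep['dateadded'] >= cutoff]
    return Counter(ep['show'] for ep in recent)


# Made-up library contents for illustration
now = datetime.datetime(2016, 1, 10, 20, 0)
episodes = [
    {'show': 'Modern Family', 'dateadded': datetime.datetime(2016, 1, 9, 21, 0)},
    {'show': 'Modern Family', 'dateadded': datetime.datetime(2016, 1, 7, 21, 0)},
    {'show': 'Arrow', 'dateadded': datetime.datetime(2016, 1, 8, 20, 0)},
    {'show': 'Gotham', 'dateadded': datetime.datetime(2015, 12, 20, 20, 0)},
]
counts = summarize_new_episodes(episodes, now)
print(sorted(counts.items()))  # [('Arrow', 1), ('Modern Family', 2)]
```

Keeping the per-show counts around also covers the third question type, where the Echo reports how many unseen episodes of a specific show are waiting.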

In order to make the interaction with the Echo more interesting and enjoyable, much of the remaining code for each intent is there to build a natural-sounding response for each of several cases. The app even uses a bit of randomness to provide variety in the Echo’s responses.

    if num_shows == 0:
        # There's been nothing added to Kodi recently
        answers = [
            "You don't have any new shows to watch.",
            "There are no new shows to watch.",
        ]
        answer = random.choice(answers)
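For the cases where there are shows to report, the answer needs to read like something a person would say. Here’s a minimal sketch of one way to do that, with a randomly chosen lead-in and a spoken-style list. The function names and the lead-in phrases are my own inventions for this example, not the wording used in the repository.

```python
import random


def join_names(names):
    """Join show names the way a person would say them aloud."""
    names = list(names)
    if not names:
        return ""
    if len(names) == 1:
        return names[0]
    if len(names) == 2:
        return names[0] + " and " + names[1]
    return ", ".join(names[:-1]) + ", and " + names[-1]


def describe_new_shows(show_names, rng=random):
    """Pick a random phrasing for the list of shows with new episodes."""
    if not show_names:
        return rng.choice([
            "You don't have any new shows to watch.",
            "There are no new shows to watch.",
        ])
    lead_ins = ["You have new episodes of ", "There are new episodes of "]
    return rng.choice(lead_ins) + join_names(show_names) + "."


print(describe_new_shows(["Arrow", "Gotham", "Scandal"]))
```

Passing in a seeded random.Random instance as rng makes the choice repeatable, which is useful when testing.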

The last step performed by each intent handler is to take the response that should be spoken by the Echo and pass it to the build_alexa_response() method. This simple utility function packages it up in the format required by the ASK. When the response is returned to the Echo, the Echo answers the user’s question.

    return build_alexa_response(answer)
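For reference, a helper like this doesn’t need to do much. The sketch below is my guess at the minimum a function with that name would produce, based on the ASK response format for plain-text speech; the end_session parameter is an addition of mine, and the version in the repository may well differ.

```python
import json


def build_alexa_response(speech, end_session=True):
    """Wrap plain text in the response envelope the Alexa service expects."""
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": speech},
            "shouldEndSession": end_session,
        },
    }


print(json.dumps(build_alexa_response("There are no new shows to watch."), indent=2))
```

The dictionary still has to be serialized to JSON and sent back with an application/json content type, which is the job of the WSGI layer.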

Is The Echo A Good Fit For This Kind Of Integration?

Building and using this app has certainly made the Echo more useful. It’s easy to see how similar apps could be built for controlling other types of devices in your home automation setup. But it’s not without its drawbacks.

Pros

- The Echo provides high quality voice recognition. Rolling your own with something like CMU Sphinx would take more work and wouldn’t perform as well.

- The Alexa Skills Kit is free and only requires that you be able to build a web application that can process and return JSON data.

- The “wow” factor is pretty cool.

Cons

- You have to have a web server and it has to be able to communicate with your home automation stuff. This effectively means you have to run an Internet-facing web server at home.

- Having to say, “Alexa ask Kodi whether…” is just a little too cumbersome and unnatural. Maybe Amazon can give the Echo multiple identities, so you could just say, “Kodi, do we have any new shows?”

- Every request goes outside the home to Amazon’s servers. Not everyone is comfortable with this, and it’s definitely not as fast as a purely in-home solution could be.

Love the tutorial. I responded to your comment on the Kodi forums but am wondering how extensive this can get. Do you think it’s possible for the Echo to handle playback features like play/stop, and something along the lines of “play the next episode of whatever”? I’ve implemented this on my Android phone but am trying to understand the makeup of the Echo to see if this can be accomplished as well.

I used the Yatse app API for my phone; I just don’t know enough about the JSON data that Kodi outputs.

The JSON-RPC API for Kodi is definitely powerful enough to control playback in the ways you describe. When you’re ready to get into it, take a look at the kodi.py file in my repository. You’ll see there’s already a method that tells Kodi to toggle Play/Pause. I use kodi.py to stop, pause, play, and seek.

Thanks for the reply Chris, definitely going to play around with this as it was one of the reasons I got an Echo… that and to nerd out with Philips Hue lights.

While certainly not a huge programmer, I’m going to see what I can figure out. I just needed a nudge to make sure the JSON data would work.

I also have my own skill and have been doing a lot with the HA Bridge Hue emulator and the UDI ISY for lighting control. I was going to do some work in my home theater, but am concerned that the music/audio in the theater will confuse the Echo. What, if any, is your experience using the Echo during a loud movie with heavy dialog or background music?

I think you’re likely to find that the Echo isn’t particularly good in loud, noisy environments. The Echo does pretty well listening when its own music is playing, but when the sound comes from outside the Echo, like in your home theater setup, it’ll definitely be affected.

Hey Chris, I’ve expanded your library quite a bit. Would you prefer I do a PR or just fork it? I modified it a little bit to run on OpenShift automatically on a free server. I figured that way it’d be easier to set up for people who don’t want to fiddle with Apache.

Hey Joe,

Please feel free to submit a PR. I’d love to see it evolve with input from many angles.

Hey Chris, I ended up forking it since there was just almost too much changing. Here’s the update: https://github.com/m0ngr31/kodi-alexa

Thanks for all of these tutorials! I will most likely use the wemo method to do all my home things, but this will go a long way to help me with business things, such as integrating with SharePoint and my office 365 calendar.

One question though: could I run the wemo emulation, which I would use to drive the gpio pins and maybe IFTTT, as well as the web server for skills on a single raspberry pi? I don’t see why not, but what are your thoughts?

Nick, you could definitely run the wemo emulator on a Raspi. The wemo emulator is very lightweight in terms of resource needs.

Chris,

Thanks very much for sharing your work. I was wondering if I can bug you. I’m attempting your setup, but having a hard time getting past this error in my Apache log. I’m new to Python, so I’m not sure if you experience the same issue in your environment:

Changes I made to your scripts:

1. #!/usr/bin/env /usr/local/bin/python2.7 (both echo_handler.wsgi & kodi.py)

2. Updated KODI & PORT variable in kodi.py

Access log:

==> ha_access_log ha_error_log POST / HTTP/1.1

> Host: homeauto.net

> User-Agent: curl/7.43.0

> Accept: */*

> Content-Length: 81

> Content-Type: application/x-www-form-urlencoded

>

* upload completely sent off: 81 out of 81 bytes

< HTTP/1.1 500 Internal Server Error

< Date: Mon, 04 Jan 2016 04:17:28 GMT

< Server: Apache

< Content-Length: 506

< Connection: close

< Content-Type: text/html; charset=iso-8859-1

<

200 Error

Error

The server encountered an internal error or

misconfiguration and was unable to complete

your request.

Please contact the server administrator,

admin@localhost and inform them of the time the error occurred,

and anything you might have done that may have

caused the error.

More information about this error may be available

in the server error log.

* Closing connection 0

##################

Virtual server:

#homeauto.net virtual

DocumentRoot "/data/servers/ha_fe_apache/htdocs/homeauto"

ServerName homeauto.net

ServerAlias www.homeauto.net

WSGIDaemonProcess homeauto.net processes=2 threads=15 display-name=%{GROUP}

WSGIProcessGroup homeauto.net

WSGIScriptAlias / /data/servers/ha_fe_apache/htdocs/homeauto/ha/echo_handler.wsgi

# Logs

ErrorLog logs/ha_error_log

CustomLog logs/ha_access_log common

LogLevel info

Thanks in advance for any help with this dude.

~K

Hi Kevin,

What’s in your ha_error_log when this happens? It looks like your Apache wsgi configuration should be OK, so hopefully the error log will show the Python exception that you’re hitting.

BTW, if you’re going to change the first line, you can just make it “#!/usr/local/bin/python2.7”. The only reason to use /usr/bin/env is when you want to use an unqualified name (like just “python”) and have it figure out the right executable to use. With a full path, you don’t need to use env.

Hi Chris,

Thanks for sharing fauxmo! It works just great on the Raspberry Pi. I have a question though, in fauxmo we use the words on and off, is it also possible to use other corresponding words like open and close?

For instance, I would like to use fauxmo as well to close our shutters. And using the on or off words for this particular example seems a bit weird but it works though. So can we use other words as well that can be defined in fauxmo?

Thanks in advance.

The words and phrases that will work are up to Amazon and are built into their service. In the past, I’ve tried “open” and it worked the same as “turn on”. I often say, “kill the dining room lights” and that works, too. So you’ll just have to experiment to see what words and phrases work.

However, no matter what the words are, they will always map to just two actions: on and off. You can, of course, do anything you want in the code in response to those two actions. When you get an “on” request, you can still open the garage door, or whatever.